How an Artificial Brain Learns: A Journey Inside a Neural Network

Introduction: The Challenge of the "3"

Imagine a simple square, a 28x28 grid containing 784 tiny pixels. On this grid, someone has drawn a number—a handwritten “3.” Some pixels are dark, tracing the digit's looping curves, while others are light, forming the empty space around it.

To a human, recognition is instant. But how do you teach a machine, a collection of circuits and code, to see the essential "three-ness" in this specific pattern of light and shadow? How can it learn to identify a "3" when the next one it sees might be slanted, thicker, or written with a flourish? The cognitive gulf seems immense; the sheer variability of human handwriting presents a nearly infinite number of ways to draw the same digit. The machine can't rely on a rigid template. It must learn the concept of a "3."

The answer lies not in brute-force programming but in a remarkable learning process loosely inspired by our own brains. It’s a journey of trial and error on a massive scale, a methodical process of guessing, failing, and learning from every single mistake until, slowly but surely, an understanding emerges from the chaos.

The Core Concept: Learning by Minimizing Error

Before diving into the intricate mechanics of an artificial neural network, it's crucial to grasp the single, elegant principle that drives all of its learning. At its heart, a neural network is a mistake-corrector.

It operates on a beautifully simple loop: it makes a guess, it checks how wrong that guess was, and it then slightly adjusts its internal configuration to make a better guess the next time. However complex the details, the entire training process boils down to one goal: minimizing the difference, or "Loss," between the network's predictions and the correct answers.

This approach was directly inspired by the biological architecture of the human brain. Pioneers like Warren McCulloch, Walter Pitts, and Frank Rosenblatt modeled the first artificial neurons based on their biological counterparts. Just as our own brain cells strengthen or weaken their connections based on repeated exposure to stimuli, an artificial neural network learns by adjusting the strength of the connections between its own simple processing units.

Now, let's break down this powerful learning cycle into its essential, step-by-step components.

The Deep Dive: The Anatomy of Machine Learning

1. The Blueprint: An Artificial Brain's Structure

The strategic genius of a neural network lies in its architecture. Its layered structure is not arbitrary; it is precisely this design that allows the network to methodically transform raw, meaningless data into a meaningful, high-level understanding.

The network consists of three fundamental types of layers:

- The Input Layer: This is the network's sensory organ. It’s where raw data—like the brightness values of every pixel in an image—first enters the system. It simply receives and passes on the initial information.

- The Hidden Layers: This is the network's internal thought process. Often referred to as a "black box," these layers extract increasingly complex features. A network with multiple hidden layers is the foundation of "deep learning."

- The Output Layer: This is the network's voice. After the data has been processed, this layer produces the final result—a prediction, a classification, or a decision.
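As a rough illustration of this blueprint, the sketch below counts the adjustable numbers (weights and biases) in a fully connected network for the digit task. The 784 inputs follow from the 28x28 grid; the 10 outputs assume one output neuron per digit, and the two hidden layers of 16 neurons are an arbitrary, hypothetical choice, since the text does not specify a size.

```python
# Hypothetical layer sizes: 784 inputs, two hidden layers of 16 neurons, 10 outputs.
LAYER_SIZES = [784, 16, 16, 10]

def count_parameters(layer_sizes):
    """Return (weights, biases) for a fully connected network with these layer sizes."""
    weights = 0
    biases = 0
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        weights += n_in * n_out  # one weight per connection between adjacent layers
        biases += n_out          # one bias per neuron outside the input layer
    return weights, biases

w, b = count_parameters(LAYER_SIZES)
print(w, b, w + b)
```

Even this small layout has 12,960 weights and 42 biases, roughly thirteen thousand knobs, which is why the tuning has to be automated.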

The Analogy: The Corporate Hierarchy

Think of this structure like a large company. The Input Layer is the mailroom, receiving and sorting all the raw data. The Hidden Layers are the teams of mid-level managers. In this idealized picture, the first team spots simple things (curves, edges) and passes reports up to the next team, who synthesize these into larger concepts (loops, stems). This continues up the chain until the final report lands on the CEO's desk. That CEO is the Output Layer, making the ultimate decision ("This image is a '3'") based on the processed intelligence.

To feed our 28x28 pixel image into this system, the grid is "flattened" into a single list of 784 numbers. The brightness value of each pixel becomes the initial activation value for a corresponding neuron in the input layer.
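Flattening can be sketched in a few lines of Python. The function name `flatten_and_scale` is hypothetical, and so is the assumption that brightness arrives as integers from 0 to 255 and is rescaled to the range 0.0 to 1.0, a common convention rather than something the text requires. Rows are laid end to end, so the pixel at row r, column c lands at position 28 * r + c.

```python
def flatten_and_scale(image):
    """Flatten a 2-D grid of 0-255 brightness values into one list scaled to 0.0-1.0."""
    return [pixel / 255.0 for row in image for pixel in row]

# A blank 28x28 image with a single full-intensity pixel at row 1, column 2.
image = [[0] * 28 for _ in range(28)]
image[1][2] = 255

inputs = flatten_and_scale(image)
print(len(inputs))  # 784 input activations
print(inputs[30])   # row 1, column 2 -> position 28 * 1 + 2 = 30
```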

2. The Forward March: How Information Travels

The first phase of every prediction is called the forward pass: the input flows through the network, layer by layer, until it emerges as an output. For an untrained network, that output is an initial, uneducated guess. The mechanism is a beautiful cascade of simple mathematics.

Each neuron in a hidden layer (and in the output layer) receives the outputs of the previous layer. It multiplies each incoming value by its own weight (the importance of that connection), adds the results together, adds a bias (which shifts the total up or down), and passes the sum through an activation function (a gatekeeper that decides how strongly the signal is passed on).
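A single neuron's calculation might look like the sketch below, assuming the sigmoid as the activation function (one common choice; the text does not name one). The inputs, weights, and bias are made-up values chosen so that the weighted sum plus the bias comes out to exactly 0.

```python
import math

def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron_output(inputs, weights, bias):
    """Multiply each input by its weight, sum, add the bias, then apply the activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Three incoming activations with illustrative weights and bias:
# 1.0*0.5 + 0.5*(-0.25) + 0.0*2.0 - 0.375 = 0.0
print(neuron_output([1.0, 0.5, 0.0], [0.5, -0.25, 2.0], bias=-0.375))
```

Because the total is exactly zero, the sigmoid returns 0.5, right on the fence between "off" and "on." Larger totals push the output toward 1, more negative totals toward 0.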

The Analogy: A Cascade of Faucets

Imagine the network as a complex plumbing system. Each neuron is a faucet. The Weight is a dial on the pipe determining how much water flows. The Bias is a second dial adding extra pressure. The Activation Function is a pressure sensor; it only opens the faucet to the next pipe if the combined pressure is strong enough. The network's final output is the amount of water that makes it to the end.
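The faucet's pressure sensor describes a step function, which is strictly all-or-nothing. Trained networks more often use smooth or piecewise-linear activations such as the sigmoid or ReLU, partly because gradient descent needs a slope to follow and a step function's slope is zero almost everywhere. The sketch compares the three; picking these particular three is illustrative, not something the text prescribes.

```python
import math

def step(z):
    """Strict on/off gate: outputs 1.0 only when the input is zero or above."""
    return 1.0 if z >= 0 else 0.0

def sigmoid(z):
    """Smooth gate: output rises gradually from 0 toward 1."""
    return 1.0 / (1.0 + math.exp(-z))

def relu(z):
    """Blocks negative signals entirely, passes positive ones through unchanged."""
    return max(0.0, z)

for z in (-2.0, 0.0, 2.0):
    print(z, step(z), round(sigmoid(z), 3), relu(z))
```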

At the beginning, with random weights, the network's prediction is almost certainly wrong. This leads to the critical question: How wrong is it?
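Chaining layers together gives the full forward pass. This sketch wires up the hypothetical 784-16-16-10 layout with small random weights (the uniform range of ±0.1, the zero starting biases, and the sigmoid activations are all assumptions) and feeds it random noise standing in for a flattened digit. The "guess" is simply the output neuron with the largest value, and since nothing has been learned yet, which digit wins is essentially arbitrary.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def init_layer(n_in, n_out, rng):
    """Random weights (one row per neuron) and zero biases for a fully connected layer."""
    weights = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def layer_forward(inputs, weights, biases):
    """Each neuron takes a weighted sum of the inputs plus its bias, then a sigmoid."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def forward(inputs, layers):
    """The forward pass: feed activations through every layer in turn."""
    activation = inputs
    for weights, biases in layers:
        activation = layer_forward(activation, weights, biases)
    return activation

rng = random.Random(0)
sizes = [784, 16, 16, 10]  # hypothetical architecture
layers = [init_layer(n_in, n_out, rng) for n_in, n_out in zip(sizes[:-1], sizes[1:])]

image = [rng.random() for _ in range(784)]  # stand-in for a flattened, scaled digit
outputs = forward(image, layers)
guess = max(range(10), key=lambda digit: outputs[digit])
print(guess, [round(o, 3) for o in outputs])
```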

3. The Moment of Truth: Measuring Failure with the Loss Function

To learn, the network needs feedback. This is provided by the Loss Function. It acts as the network's teacher, providing a single score that measures exactly how far off the prediction was from the correct answer. The goal is to make this score as small as possible.
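The text does not name a particular loss function. For classification, a common choice is cross-entropy applied to softmax probabilities, sketched below as an assumption. Softmax turns the 10 raw output scores into probabilities that sum to 1, and the loss is the negative logarithm of the probability assigned to the correct digit, so a confident wrong answer is punished heavily. Both score lists are made up for illustration.

```python
import math

def softmax(scores):
    """Turn raw output scores into probabilities that sum to 1."""
    biggest = max(scores)  # subtracting the max avoids overflow in exp
    exps = [math.exp(s - biggest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probabilities, correct_digit):
    """Large when the probability given to the correct digit is small."""
    return -math.log(probabilities[correct_digit])

# Hypothetical raw scores for digits 0-9 when the true label is 3.
confident_wrong = [0, 0, 0, 0, 0, 0, 0, 0, 5, 0]  # strongly favours '8'
confident_right = [0, 0, 0, 5, 0, 0, 0, 0, 0, 0]  # strongly favours '3'

print(round(cross_entropy(softmax(confident_wrong), 3), 2))  # high loss: "ice cold"
print(round(cross_entropy(softmax(confident_right), 3), 2))  # low loss: "warmer"
```

With the true label 3, the scores favouring '8' give a loss of roughly 5.06, while the scores favouring '3' give roughly 0.06.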

The Analogy: The "Hot or Cold" Game

Think of training like playing "Hot or Cold." The network is blindfolded and trying to find the answer. It takes a step (makes a guess). The Loss Function shouts feedback. If the prediction is way off, it shouts "Ice cold!" (high loss). If it's close, it shouts "Getting warmer!" (low loss). The network's goal is to only take steps that make the teacher shout "Warmer!"

4. Learning from Mistakes: The Genius of Backpropagation

Backpropagation is the engine of learning. It is the moment the network analyzes its failure and figures out who to blame.

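After the Loss Function calculates the error, backpropagation works backward from the output to the input. Using calculus (specifically, the chain rule), it calculates how much each individual weight and bias contributed to the final error, that is, how much the loss would change if that one parameter were nudged slightly. Together, these numbers form the gradient, which assigns "blame" to the specific connections that led to the wrong answer.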
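To see the chain rule at work without a full network, the sketch below uses a hypothetical two-neuron chain (one hidden neuron feeding one output neuron) with sigmoid activations and a squared-error loss, both assumptions for illustration. `backprop` computes the gradient by working backward from the output, and `numerical_gradient` checks it the slow way, by nudging each parameter and re-measuring the loss. The two should agree to many decimal places.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(params, x):
    """Tiny chain: input x -> hidden neuron h -> output neuron y_hat."""
    w1, b1, w2, b2 = params
    h = sigmoid(w1 * x + b1)
    y_hat = sigmoid(w2 * h + b2)
    return h, y_hat

def loss(params, x, y):
    _, y_hat = forward(params, x)
    return 0.5 * (y_hat - y) ** 2  # squared error

def backprop(params, x, y):
    """Gradient of the loss w.r.t. (w1, b1, w2, b2), computed from the output backward."""
    w1, b1, w2, b2 = params
    h, y_hat = forward(params, x)
    # Output neuron: sensitivity of the loss to its weighted sum (chain rule).
    delta_out = (y_hat - y) * y_hat * (1 - y_hat)
    # Hidden neuron: its share of the blame flows back through weight w2.
    delta_hidden = delta_out * w2 * h * (1 - h)
    return [delta_hidden * x, delta_hidden, delta_out * h, delta_out]

def numerical_gradient(params, x, y, eps=1e-6):
    """Brute-force check: nudge each parameter up and down and watch the loss."""
    grads = []
    for i in range(len(params)):
        up = list(params)
        up[i] += eps
        down = list(params)
        down[i] -= eps
        grads.append((loss(up, x, y) - loss(down, x, y)) / (2 * eps))
    return grads

params = [0.5, -0.1, 1.5, 0.2]  # made-up starting values
analytic = backprop(params, x=1.0, y=0.0)
numeric = numerical_gradient(params, x=1.0, y=0.0)
print(analytic)
print(numeric)
```

Backpropagation only produces this report of blame; changing the weights is the job of the next step.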

The Analogy: A Team Debrief

Imagine a project manager analyzing a failed project. She starts at the end and works backward. She asks the final person, "Why did this fail?" That person points to faulty info from the previous person, and so on. By the end, the manager knows precisely which decisions (weights) made by which team members (neurons) caused the failure.

5. The Slow Descent: How the Network Improves

Gradient Descent is the algorithm that translates the feedback from backpropagation into concrete improvements. It systematically adjusts the weights to minimize the loss.

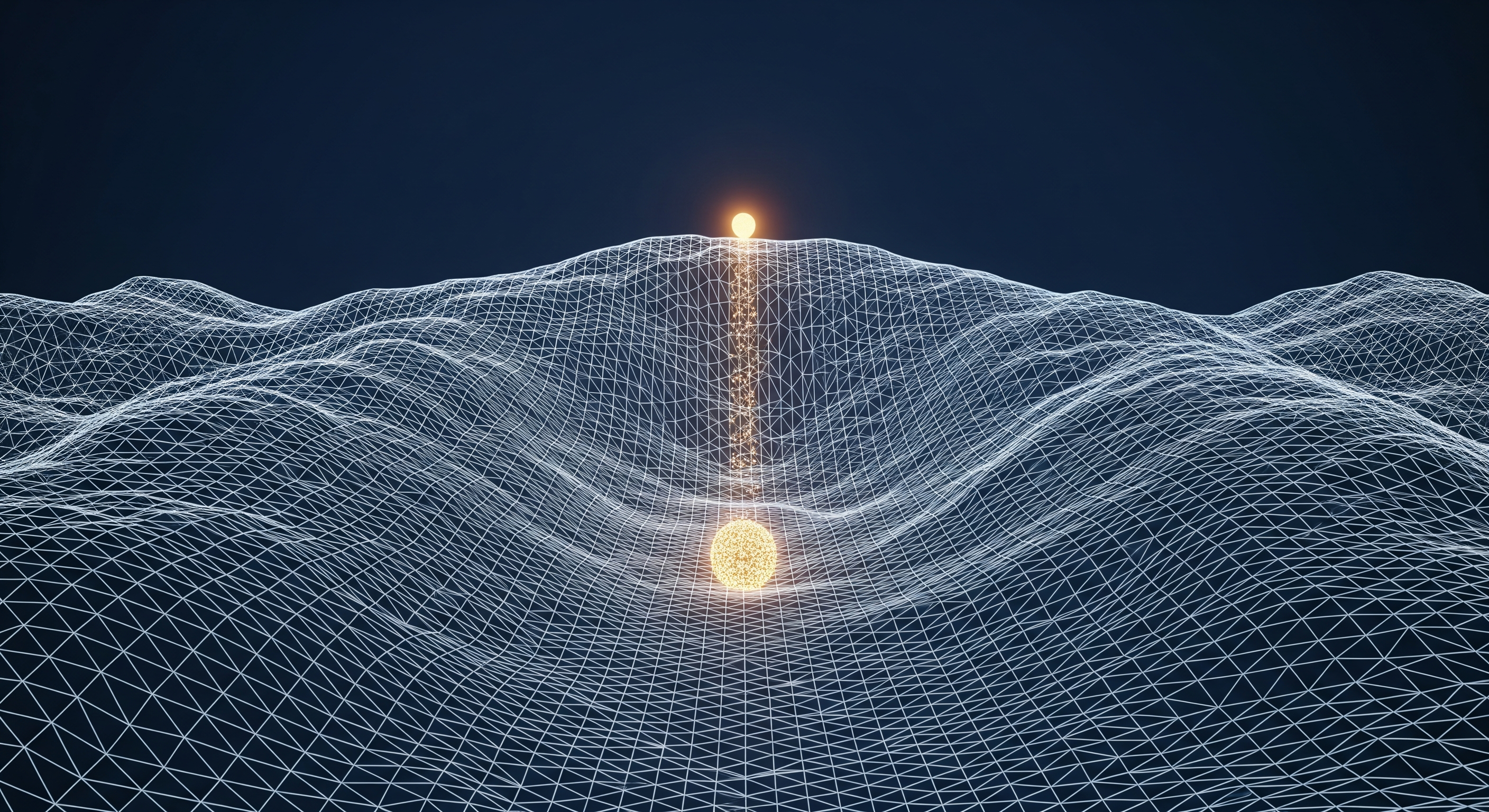

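Imagine the loss as a landscape of hills and valleys, where every position corresponds to one particular setting of the weights and the height at that position is the loss. The bottom of the deepest valley is the "perfect" state, the lowest loss the network can reach.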

- If the slope is negative, the algorithm adds to the weights to step down.

- If the slope is positive, it subtracts from the weights.

It repeats this process, taking small steps down the steepest slope (the size of each step is scaled by the Learning Rate), and slowly descends toward a point of minimum error, which may be a local valley rather than the single deepest one.
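The update rule behind those bullets is new_weight = weight - learning_rate * slope, which automatically adds to the weight when the slope is negative and subtracts when it is positive. The sketch applies it to a made-up one-weight loss whose valley sits at w = 3; the starting point and the learning rate of 0.1 are arbitrary assumptions.

```python
def loss(w):
    """A made-up one-weight loss landscape with a single valley at w = 3."""
    return (w - 3.0) ** 2

def slope(w):
    """Derivative of the loss: negative left of the valley, positive right of it."""
    return 2.0 * (w - 3.0)

w = -1.0             # arbitrary starting weight
learning_rate = 0.1  # hypothetical step-size multiplier
for _ in range(50):
    w = w - learning_rate * slope(w)  # negative slope -> w grows; positive slope -> w shrinks

print(round(w, 4), loss(w))
```

The learning rate matters: for this particular loss, any value above 1.0 makes each step overshoot the valley by more than the last, so the weight diverges instead of settling.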

The Walkthrough: A Digit's Journey to Recognition

Let's walk through a single learning cycle for our handwritten digit task.

- Step 1: The Input. A handwritten '3' is flattened into 784 numbers and fed into the input layer.

- Step 2: The First Guess. The signal cascades forward. Since the network is untrained, it guesses '8'.

- Step 3: Calculating Error. The Loss Function compares '8' with the correct label '3' and returns a high error score.

- Step 4: Assigning Blame. Backpropagation sends the error backward, identifying which weights mistakenly activated the '8' neuron.

- Step 5: Making Adjustments. Gradient Descent slightly weakens the weights that led to '8' and strengthens those that point to '3'.

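- Step 6: Repeat. Steps 1 to 5 make up a single training step. The network repeats them across thousands of different handwritten examples; one full pass through the entire training set is called an "epoch." After many epochs, it reliably recognizes a '3', ideally including ones it has never seen before.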
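The six steps can be compressed into a toy training loop. To keep it short and dependency-free, this sketch swaps the 28x28 digits for two hypothetical 3x3 patterns (a vertical bar and a horizontal bar) and the multi-layer network for a single sigmoid neuron trained with binary cross-entropy. For that pairing, the gradient of the loss with respect to the neuron's weighted sum simplifies to p - label, so "backpropagation" collapses to one line. The epoch count, learning rate, and seed are arbitrary assumptions; the comments map each line to the walkthrough.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# Hypothetical 3x3 "images": label 1 for a vertical bar, 0 for a horizontal bar.
VERTICAL = [[0, 1, 0],
            [0, 1, 0],
            [0, 1, 0]]
HORIZONTAL = [[0, 0, 0],
              [1, 1, 1],
              [0, 0, 0]]
DATASET = [(VERTICAL, 1), (HORIZONTAL, 0)]

def flatten(image):
    """Lay the rows end to end, as with the 28x28 digit."""
    return [float(pixel) for row in image for pixel in row]

def predict(weights, bias, x):
    """Forward pass of one sigmoid neuron: probability that x is a vertical bar."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

def train(epochs=200, learning_rate=0.5, seed=0):
    rng = random.Random(seed)
    weights = [rng.uniform(-0.1, 0.1) for _ in range(9)]  # random starting weights
    bias = 0.0
    loss = None
    for _ in range(epochs):                # Step 6: repeat; one epoch = one pass over DATASET
        for image, label in DATASET:
            x = flatten(image)             # Step 1: the input
            p = predict(weights, bias, x)  # Step 2: the guess (forward pass)
            # Step 3: binary cross-entropy loss for this example
            loss = -(label * math.log(p) + (1 - label) * math.log(1 - p))
            # Step 4: blame; for sigmoid + cross-entropy, dLoss/d(weighted sum) = p - label
            grad_z = p - label
            # Step 5: gradient descent on every weight and on the bias
            weights = [w - learning_rate * grad_z * xi for w, xi in zip(weights, x)]
            bias -= learning_rate * grad_z
    return weights, bias, loss

weights, bias, last_loss = train()
for image, label in DATASET:
    print(label, round(predict(weights, bias, flatten(image)), 3))
```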

The ELI5 Dictionary: Key Terms Demystified

Neuron (or Node)

- The Definition: The basic computational unit that receives inputs, calculates, and produces an output.

- Think of it as... A single musician in an orchestra, who only plays their note when they get the right cue.

Weights

- The Definition: A number controlling the strength of the connection between two neurons.

- Think of it as... The volume dial on a connection. A larger weight means the signal is "louder" and more influential; a negative weight turns the signal into a dampener that pushes the next neuron away from firing.

Bias

- The Definition: An extra parameter added to the input sum to shift the output.

- Think of it as... A "head start" for a neuron, allowing it to fire even if inputs are weak. A negative bias works the other way, as a handicap the inputs must overcome.

Activation Function

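- The Definition: A function applied to a neuron's weighted sum that determines its output, that is, how strongly it "fires." Nonlinear activations are what let stacked layers learn more than simple straight-line relationships.

- Think of it as... A dimmer switch. Weak signals are dimmed or blocked entirely, while strong ones pass through brightly.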

Loss Function

- The Definition: A method of calculating the difference between the prediction and the actual answer.

- Think of it as... A video game score telling you how far you are from the goal. You want to drive this score as close to zero as possible.

Backpropagation

- The Definition: The algorithm that applies the chain rule backward through the network to calculate how much each weight and bias contributed to the error.

- Think of it as... Rewinding a game tape to see exactly which player made the mistake.

Gradient Descent

- The Definition: An optimization algorithm used to minimize the loss function.

- Think of it as... Walking down a hill blindfolded. You feel the slope with your feet and always step downwards.

Conclusion: From Simple Rules to Complex Intelligence

The journey of how an artificial brain learns is a testament to the power of simple rules applied at a massive scale. A forward pass makes an initial guess. A loss function quantifies how wrong that guess is. Backpropagation assigns blame for the error. And gradient descent makes tiny corrections.

This iterative loop of error minimization, repeated millions of times, is what allows a neural network to go from a state of random chaos to a highly specialized expert. Though the training process is computationally expensive, its ultimate gift is the final, "trained" model. This file is not hand-written code but the learned weights and biases themselves: a captured, crystallized form of expertise, a piece of artificial intuition ready to be deployed wherever it is needed, where each new prediction takes only a quick forward pass.