From Pixels to Perfection: The Hidden Science of AI Background Removal

Introduction: The Common Frustration and the Digital Promise

We’ve all been there. You capture a moment that’s almost perfect: a sharp, smiling portrait, a dynamic product shot, or a cherished family photo. But something is off. The background is cluttered with the hustle and bustle of the city, an overcast sky washes out the mood, or the setting simply doesn't match the professional aesthetic you need.

For years, fixing this meant hours of tedious, meticulous work in complex software, a task reserved for skilled graphic designers. Having a great photo held hostage by its surroundings is a familiar frustration. Today, however, a single click in an app can achieve what once seemed like digital magic, isolating a subject from its environment in seconds.

But what is really happening in the seconds between your click and the final, flawless image? How does a machine learn to see a photo not as a flat image, but as a world of distinct objects, and then perform a disappearing act on everything you don't want?

The Core Concept: A Digital Disappearing Act

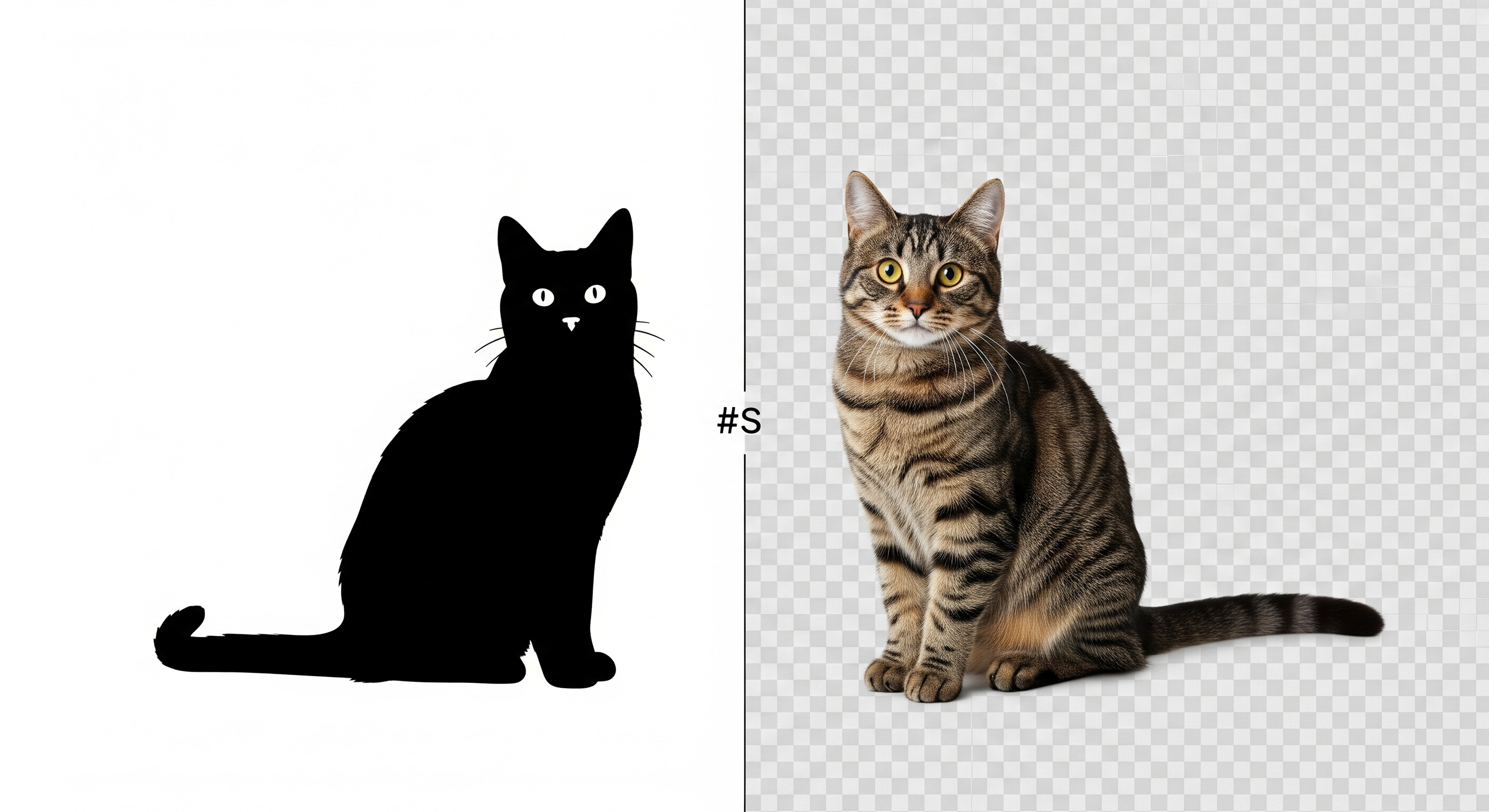

Before diving into the complex machinery, it's crucial to understand the fundamental principle at work. So, how does AI background removal actually work? At its core, the technology uses a highly trained artificial intelligence to perform a series of sophisticated steps with incredible speed.

First, it analyzes an image by breaking it down into its smallest components: pixels. Then, leveraging its training on millions of other images, it distinguishes the main subject (like a person, an animal, or a product) from everything surrounding it. Finally, it traces a precise outline around the subject and separates the two, leaving you with a clean, isolated subject.

The Analogy: The Digital Artist

Think of it as an impossibly fast and precise digital artist who can see the exact boundary of any object and cut it out with perfect accuracy in the blink of an eye.

Now, let's explore the sophisticated mechanisms that make this seemingly magical process possible.

The Deep Dive: Inside the Mind of the Machine

To truly appreciate this technology, we must go beyond the surface. A simple click triggers a complex cascade of events inside the AI's "mind." It’s a collaborative effort between different layers of artificial intelligence, each with a specialized role, working together to deconstruct, understand, and rebuild your image.

Thinking in Pixels: How AI First Sees Your Photo

The AI’s first step is to forget what a "photo" is and deconstruct it into a language it understands: data. It views your image not as a cohesive picture but as a vast grid of tiny pixels, examining each one and grouping them by "specific colours, patterns, and other attributes."

Each pixel is a data point that stores color and brightness values. The AI's critical task is to analyze the relationships between millions of these adjacent data points, searching for "cliffs": sudden, dramatic changes in value that signal the end of one object and the beginning of another. A sharp shift from the low brightness values of a dark blue jacket to the high values of a white wall is a clear indicator of an edge.
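To make the idea of a "cliff" concrete, here is a minimal sketch in plain Python. The tiny grayscale grid, the function name `horizontal_cliffs`, and the threshold of 50 are all assumptions chosen for illustration; real tools use far more sophisticated, learned detectors.

```python
# Illustrative only: a tiny grayscale image as a grid of brightness values (0-255).
# The left columns stand in for a dark jacket, the right columns for a bright wall.
image = [
    [30, 32, 31, 240, 242],
    [29, 31, 33, 241, 239],
    [31, 30, 32, 238, 240],
]

def horizontal_cliffs(rows, threshold=50):
    """Return (row, col) positions where brightness jumps sharply
    between a pixel and its right-hand neighbour."""
    cliffs = []
    for r, row in enumerate(rows):
        for c in range(len(row) - 1):
            if abs(row[c + 1] - row[c]) >= threshold:
                cliffs.append((r, c))
    return cliffs

edges = horizontal_cliffs(image)
print(edges)  # [(0, 2), (1, 2), (2, 2)]
```

Every row reports a cliff between columns 2 and 3, exactly where the "jacket" ends and the "wall" begins. Small fluctuations inside each region stay below the threshold and are ignored.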

The Analogy: The Mosaic Artist

This process is akin to a master mosaic artist standing before a wall of millions of colored tiles. They don't see the final picture at first; they see individual pieces, and by carefully grouping tiles of similar color and texture, the larger image and its distinct subjects begin to emerge.

The Digital Brain: Training an AI to Understand the World

The "brains" of the operation are sophisticated Deep Learning Models, particularly a specialized type known as Convolutional Neural Networks (CNNs). These aren't simple programs following rigid instructions; they are complex systems that have been "trained on millions of images."

This extensive training teaches the AI to generalize and comprehend what constitutes "foreground and background," learning to recognize "human figures and common objects," identify "complex textures," and even perceive incredibly "fine details like hair and transparent items." Older 'magic wand' tools in photo software lacked this multi-layered analysis: they selected neighboring pixels by color similarity alone, which is why they notoriously failed around hair and complex edges.
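The basic operation inside a CNN layer is a small filter sliding across the image. The sketch below shows one such filter in plain Python. The function name `convolve2d`, the hand-written kernel, and the toy image are assumptions for illustration; a trained network learns its own kernel values and stacks many layers of them.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding) and return the grid of
    weighted sums. This is the sliding-window operation CNN layers compute."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output

# Hand-written vertical-edge filter (a trained CNN would learn these numbers).
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

# 4x5 toy image: dark on the left (0), bright on the right (9).
toy = [
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
    [0, 0, 0, 9, 9],
]

feature_map = convolve2d(toy, vertical_edge)
print(feature_map)  # [[0, 27, 27], [0, 27, 27]]
```

The output is zero over the flat dark region and large wherever the window straddles the dark-to-bright boundary, which is how a filter "responds" to a pattern it is tuned for.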

The Analogy: The Brilliant Detective

A Deep Learning Model is like a brilliant detective who has spent a lifetime studying millions of case files. When presented with a new crime scene (your image), this detective can instantly identify the key subject and separate it from the background noise because they have seen countless variations before. The CNNs act as the detective's specialized magnifying glass, allowing them to see the finest details.

The Art of Separation: Semantic Segmentation and Digital Masks

Once the AI understands the image, it performs the critical step: Semantic Segmentation. This is the technical term for the "pixel-level analysis" where the AI classifies every single pixel, assigning it to a specific category: "this pixel is part of the main subject" or "this pixel is part of the background."
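As a rough illustration of per-pixel classification, the sketch below assumes a model has already produced a score for each class at every pixel and simply picks the highest-scoring class. The score values, the `CLASSES` list, and the function name `label_pixels` are hypothetical.

```python
CLASSES = ["background", "subject"]

# Hypothetical per-pixel scores, as a segmentation model might emit them:
# scores[row][col] = [background_score, subject_score]
scores = [
    [[0.9, 0.1], [0.2, 0.8], [0.7, 0.3]],
    [[0.4, 0.6], [0.1, 0.9], [0.8, 0.2]],
]

def label_pixels(score_grid):
    """Assign each pixel the index of its highest-scoring class."""
    return [
        [max(range(len(px)), key=lambda k: px[k]) for px in row]
        for row in score_grid
    ]

labels = label_pixels(scores)
print(labels)  # [[0, 1, 0], [1, 1, 0]]
```

Each number in `labels` is an index into `CLASSES`, so `1` means "this pixel is part of the main subject" and `0` means "this pixel is part of the background."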

This highly detailed classification results in the creation of what's called a "detailed mask," the blueprint for the final separation. In its simplest form, this digital "mask" is a map where white represents the foreground to be kept and black represents the background to be discarded; around soft edges such as hair, in-between gray values can mark partially transparent pixels. The quality of this mask, specifically the precision of the boundary between black and white, is the single most important factor determining the quality of the final result.
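One common way to use such a mask is as an alpha (transparency) channel. The sketch below assumes a mask where 255 means "keep" and 0 means "discard"; the function name `apply_mask` and the sample pixel values are made up for illustration.

```python
def apply_mask(pixels, mask):
    """Combine RGB pixels with a mask (0 = background, 255 = foreground)
    into RGBA pixels, using the mask value as the alpha channel."""
    return [
        [(r, g, b, a) for (r, g, b), a in zip(pixel_row, mask_row)]
        for pixel_row, mask_row in zip(pixels, mask)
    ]

# 2x2 toy photo: left column is the subject, right column is the background.
photo = [
    [(200, 180, 160), (20, 40, 60)],
    [(210, 190, 170), (25, 45, 65)],
]
mask = [
    [255, 0],
    [255, 0],
]

cutout = apply_mask(photo, mask)
print(cutout[0])  # [(200, 180, 160, 255), (20, 40, 60, 0)]
```

Pixels with alpha 255 stay fully visible, while pixels with alpha 0 become fully transparent, which is what "discarding the background" means in practice.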

The Analogy: The Perfect Stencil

Imagine an artist creating a perfect, impossibly detailed stencil. Instead of using scissors, they meticulously paint over the main subject in your photo with pure white paint and the entire background with pure black paint, following every curve with absolute precision. The final "mask" is this completed black-and-white stencil, which can then be laid over the original photo to perfectly isolate the subject.

Optimizing the Output: How to Help the AI Succeed

While the AI is powerful, its performance is heavily dependent on the quality of the input image. Providing a crisp, well-lit, high-resolution photo is crucial for the best results.

To help the AI succeed, use high-resolution images (at least 1024 x 768 pixels) and make sure the subject is well lit. Good lighting creates a "clear separation between the subject and surroundings," and the difference is staggering: high-contrast visuals can achieve a success rate of up to 95%, while low-contrast visuals may only reach about 60%. Camera techniques like using a shallow depth of field can further help the AI by naturally blurring the background, boosting accuracy by up to 30%.
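A quick pre-upload check along these lines can be sketched as follows. The 1024 x 768 minimum comes from the text above; the helper names and the choice of Michelson contrast as a rough "how much separation is there" measure are assumptions for illustration, not something any particular tool is known to use.

```python
MIN_LONG_SIDE, MIN_SHORT_SIDE = 1024, 768  # minimum size suggested above

def meets_min_resolution(width, height):
    """True if the image is at least 1024 x 768 in either orientation."""
    long_side, short_side = max(width, height), min(width, height)
    return long_side >= MIN_LONG_SIDE and short_side >= MIN_SHORT_SIDE

def michelson_contrast(gray_values):
    """Michelson contrast of brightness values: (max - min) / (max + min).
    Ranges from 0.0 (completely flat) to 1.0 (maximum contrast)."""
    lo, hi = min(gray_values), max(gray_values)
    if hi + lo == 0:
        return 0.0
    return (hi - lo) / (hi + lo)

print(meets_min_resolution(1920, 1080))  # True
print(meets_min_resolution(800, 600))    # False
print(michelson_contrast([50, 120, 200]))
```

A low contrast value between subject and background pixels hints that the photo may be harder to separate cleanly, in which case better lighting or a plainer backdrop is worth trying before upload.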

The Analogy: The Chef and Ingredients

Think of the AI as a world-class chef. You can have the most talented chef, but if you provide them with poor-quality ingredients, the final dish will be mediocre. Providing a high-quality photo is like giving them farm-fresh, premium ingredients, allowing them to perform at their absolute best.

The Walkthrough: A Photo's Journey Through the AI

To tie all these concepts together, let's follow a single photo on its journey through the entire background removal process. Our scenario: an online store owner needs to create a clean product listing for a new watch and uploads a photo of a person modeling it against a blurry office background.

- The Upload: The user navigates to the tool and uploads their high-resolution photo. From their perspective, the process has just begun. For the AI, the work is already underway.

- Image Analysis: Instantly, the AI discards the idea of a "person" or "watch." It sees only a grid of millions of pixels. It begins analyzing and grouping the data—the metallic sheen of the watch, the skin tones of the arm, and the muted colors of the out-of-focus office behind them.

- Subject Detection (The CNN at Work): Drawing on its deep learning models trained on millions of images, the powerful CNN springs into action. It confidently identifies the patterns corresponding to a "human figure" and a "common object" (the watch), paying special attention to the fine details like the negative space between the person's arm and their body.

- Edge Detection & Mask Generation: The AI now performs its pixel-level classification (Semantic Segmentation). Every pixel is assigned a label: person, watch, or background. Because the person and the watch are both part of the subject, their pixels are merged into a single foreground region, producing a precise black-and-white mask that outlines the model and the product they are wearing.

- Isolation and Refinement: Using the mask as a guide, the system digitally removes every pixel labeled background, leaving the subject on a transparent layer. The AI then performs a final step and "refines the edges of the main object to ensure it remains neat and aesthetically pleasing."

- The Final Product: Seconds after the upload, the user sees the result: the model and watch, perfectly isolated. They can now place the subject on a clean white background, creating a professional e-commerce image.
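The last steps of this walkthrough (turning labels into a mask, then placing the subject on a white background) can be sketched as follows. The label ids, function names, and sample values are hypothetical, and the alpha value of 51 stands in for a softened edge pixel of the kind a refinement step might produce.

```python
BACKGROUND, PERSON, WATCH = 0, 1, 2  # hypothetical label ids

def labels_to_mask(labels, keep=(PERSON, WATCH)):
    """Merge every 'keep' label into white (255); everything else is black (0)."""
    return [[255 if lab in keep else 0 for lab in row] for row in labels]

def composite_on_background(pixels, alpha_mask, background=(255, 255, 255)):
    """Blend each RGB pixel over a solid background colour.
    alpha_mask holds values from 0 (pure background) to 255 (pure subject);
    in-between values produce soft, blended edges."""
    result = []
    for pixel_row, alpha_row in zip(pixels, alpha_mask):
        out_row = []
        for rgb, a in zip(pixel_row, alpha_row):
            weight = a / 255
            out_row.append(tuple(
                round(weight * fg + (1 - weight) * bg)
                for fg, bg in zip(rgb, background)
            ))
        result.append(out_row)
    return result

# Person and watch pixels both become foreground.
print(labels_to_mask([[BACKGROUND, PERSON, WATCH]]))  # [[0, 255, 255]]

# One row of identical pixels with a fully kept, a softened, and a removed alpha.
row = [[(100, 50, 0), (100, 50, 0), (100, 50, 0)]]
alpha = [[255, 51, 0]]
print(composite_on_background(row, alpha))
# [[(100, 50, 0), (224, 214, 204), (255, 255, 255)]]
```

The first pixel keeps its original color, the last becomes pure white, and the middle one is a blend of the two. That blend is what keeps an edge from looking like a hard, jagged cut.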

The ELI5 Dictionary: Key Terms Demystified

To make things even clearer, here is a simple breakdown of the technical terms used in this article.

- Pixel

  - The technical definition: The smallest unit of a digital image, a tiny dot of color that, when combined with millions of others, forms the complete picture.

  - Think of it as... a single colored tile in a giant mosaic.

- Algorithm

  - The technical definition: A set of rules or instructions given to a computer to solve a problem or perform a task.

  - Think of it as... a recipe that the AI follows step-by-step to separate the subject from the background.

- Deep Learning Model

  - The technical definition: A complex artificial intelligence system, inspired by the human brain, that learns to recognize patterns from massive amounts of data.

  - Think of it as... a digital "brain" that has studied millions of photos to become an expert at identifying objects.

- Convolutional Neural Networks (CNNs)

  - The technical definition: A specialized type of deep learning model designed specifically for processing and analyzing visual imagery.

  - Think of it as... a specialist part of the AI's brain that is an expert in seeing and understanding pictures, especially fine details.

- Semantic Segmentation

  - The technical definition: The process of classifying every pixel in an image to a specific category (e.g., person, car, sky) to create a detailed map of the image's contents.

  - Think of it as... giving every single pixel a label, like sorting LEGO bricks by color and shape.

- Mask Generation

  - The technical definition: The outcome of segmentation, where the AI produces a final black-and-white overlay that precisely defines the boundaries of the foreground object.

  - Think of it as... creating a perfect digital stencil to cut out the main subject.

Conclusion: The Invisible Revolution in Image Editing

We have journeyed from a simple, relatable problem—a distracting background—deep into the mind of the machine that solves it. What appears to be a one-click action is revealed to be a sophisticated cascade of technologies, from low-level pixel analysis to the brain-like pattern recognition of deep learning models and the meticulous classification of semantic segmentation.

This technology represents a "pivotal advancement in image processing," one that truly "enhances the everyday lives of users." As artificial intelligence continues to evolve, these tools are doing more than just changing photo editing. They are demolishing old barriers between professional designers and everyday creators, fundamentally transforming creative workflows. The perfect picture, once held hostage by a distracting background and complex software, is now just one click away from freedom—a testament to the invisible revolution happening inside our devices.